Embedded vision is considered one of the top-tier, fastest-growing markets. Embedded vision refers to the deployment of visual capabilities on embedded systems for the intelligent understanding of 2D/3D visual scenes. It covers a variety of rapidly growing markets and applications, such as Advanced Driver Assistance Systems (ADAS), industrial vision, video surveillance, and robotics. With the industry moving toward ubiquitous vision computing, vision capabilities and visual analysis will become an inherent part of many embedded platforms. Embedded vision is therefore expected to become a pioneering market for digital signal processing.

While vision computing is already a strong research focus, the embedded deployment of vision algorithms is still at a relatively early stage. Vision computing demands extreme compute performance at low cost, yet embedded vision platforms must deliver that performance while consuming very little power (often less than 1 W) and remaining sufficiently programmable. These conflicting goals (high performance versus low power) impose massive challenges on architecting embedded vision platforms.

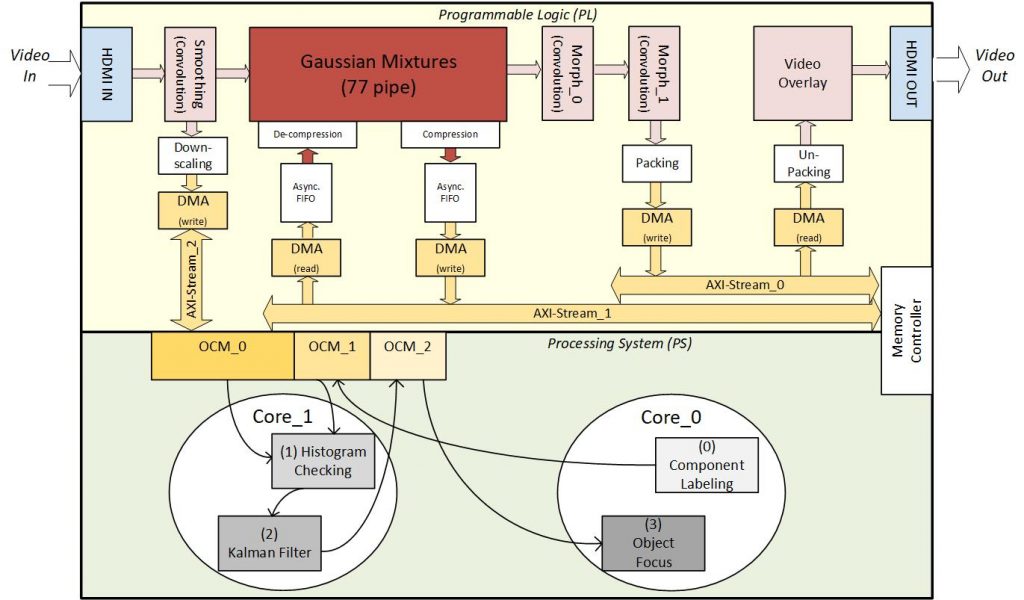

The aim is to architect, design, and implement customized vision processors that deliver both power efficiency and performance. The primary approach is to separate streaming traffic from algorithm-intrinsic traffic. Streaming traffic is the communication for accessing the data under processing (e.g., image pixels or data samples), that is, the inputs and outputs of the algorithm. Algorithm-intrinsic traffic, in contrast, is the communication for accessing the data that the algorithm itself requires (algorithm-intrinsic data). Separating the two allows the algorithm-intrinsic traffic to be customized for a quality/bandwidth trade-off, so that this traffic can be reduced as far as the required algorithm quality allows. Furthermore, traffic separation is a significant step toward efficiently chaining multiple vision algorithms into a complete vision flow. As an example, our Zynq-prototyped solution performs 40 GOPS at 1.7 W of on-chip power for a complete object detection/tracking vision flow.
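To make the traffic separation more concrete, the sketch below accounts for the two traffic classes separately for one frame. It is only an illustration under stated assumptions, not the authors' design: the names `FrameTraffic`, `frame_traffic`, and `gops_per_watt` are hypothetical, and the workload (a 1080p grayscale stream with a per-pixel background model of 3 Gaussians × 3 parameters, in the style of mixture-of-Gaussians background subtraction, read and written back once per frame) is an assumed example. The point it shows is that lowering the precision of the algorithm-intrinsic data cuts that traffic while the streaming traffic is unaffected. The last function simply divides the reported 40 GOPS by 1.7 W.

```python
"""Hypothetical sketch: per-frame memory traffic, with streaming and
algorithm-intrinsic data accounted for separately."""
from dataclasses import dataclass


@dataclass(frozen=True)
class FrameTraffic:
    streaming_bytes: int  # pixels read in + results written out
    intrinsic_bytes: int  # algorithm-internal model data read and written back

    @property
    def total_bytes(self) -> int:
        return self.streaming_bytes + self.intrinsic_bytes


def frame_traffic(width: int, height: int,
                  in_bytes_per_pixel: int, out_bytes_per_pixel: int,
                  params_per_pixel: int, bits_per_param: int) -> FrameTraffic:
    """Bytes moved per frame (assumed model: one read-modify-write per frame)."""
    pixels = width * height
    # Streaming traffic depends only on the algorithm's inputs and outputs.
    streaming = pixels * (in_bytes_per_pixel + out_bytes_per_pixel)
    # Algorithm-intrinsic traffic depends on the model size and its precision,
    # which is the knob for the quality/bandwidth trade-off.
    model_bytes = (pixels * params_per_pixel * bits_per_param + 7) // 8
    intrinsic = 2 * model_bytes  # read once + write back once
    return FrameTraffic(streaming, intrinsic)


def gops_per_watt(gops: float, watts: float) -> float:
    """Energy efficiency in giga-operations per second per watt."""
    return gops / watts


if __name__ == "__main__":
    # Assumed workload: 1080p, 8-bit input, 8-bit output mask, 9 params/pixel.
    full = frame_traffic(1920, 1080, 1, 1, 9, 32)  # 32-bit model parameters
    half = frame_traffic(1920, 1080, 1, 1, 9, 16)  # 16-bit model parameters
    print("32-bit model:", full)
    print("16-bit model:", half)
    print(f"Reported flow: {gops_per_watt(40, 1.7):.1f} GOPS/W")
```

Under these assumptions the algorithm-intrinsic traffic is 36 times the streaming traffic at 32-bit precision, and halving the parameter width halves only that component. This is why it pays to treat the two traffic classes as separate design targets.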